#Write a fortran program

Text

The Evolution of Programming Paradigms: Recursion’s Impact on Language Design

“Recursion, n. See Recursion.” -- Ambrose Bierce, The Devil’s Dictionary (1906-1911)

The roots of programming languages can be traced back to Alan Turing's groundbreaking work in the 1930s. Turing's vision of a universal computing machine, known as the Turing machine, laid the theoretical foundation for modern computing. A stack was not part of that model, but the idea appears, not yet under that name, in his later engineering work.

Turing's machine utilized an infinite tape divided into squares, with a read-write head that could move along the tape in either direction, so the tape is more general than a stack. The stack-like mechanism came in his 1945 design proposal for the ACE computer, which described “bury” and “unbury” operations for saving a return address before a subroutine call and restoring it afterwards. Together, this work provided a framework that would later influence the design of programming languages and computer architectures.

In the 1950s, the development of high-level programming languages began to revolutionize the field of computer science. The introduction of FORTRAN (Formula Translation) in 1957 by John Backus and his team at IBM marked a significant milestone. FORTRAN was designed to simplify the programming process, allowing scientists and engineers to express mathematical formulas and algorithms more naturally.

Around the same time, Grace Hopper, a pioneering computer scientist, developed FLOW-MATIC, whose English-like syntax strongly shaped COBOL (Common Business-Oriented Language), designed in 1959 by the CODASYL committee. COBOL aimed to address the needs of business applications, focusing on readability. These early high-level languages organized code into reusable routines but did not yet support recursion, so they could get by without a call stack; block structure and stack-based function calls arrived with ALGOL.

As high-level languages gained popularity, the underlying computer architectures also evolved. Charles Hamblin's work on stack machines in the 1950s played a crucial role in the practical implementation of stacks in computer systems. Hamblin's stack machine, also known as a zero-address machine, utilized a central stack memory for storing intermediate results during computation.
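To make the zero-address idea concrete, here is a minimal sketch of a stack evaluator for postfix (reverse Polish) expressions. It is not a model of any historical machine: the function name `eval_rpn` and the four-operator table are assumptions chosen for illustration.

```python
import operator

# Illustrative operator table; a real stack machine would define its own instruction set.
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def eval_rpn(tokens):
    """Evaluate a postfix expression using a single operand stack."""
    stack = []
    for tok in tokens:
        if tok in OPS:
            # Operators name no addresses: both operands come off the stack.
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[tok](left, right))
        else:
            # Anything else is treated as a numeric literal and pushed.
            stack.append(float(tok))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0]


if __name__ == "__main__":
    # (2 + 3) * 4 written in postfix order
    print(eval_rpn("2 3 + 4 *".split()))
```

Because every intermediate result lives on the stack, the instructions themselves never need to mention where an operand is stored, which is the sense in which such a machine is "zero-address".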

Assembly language, a low-level programming language, was closely tied to the architecture of the underlying computer. It provided direct control over the machine's hardware, including the stack. Assembly language programs used stack-based instructions to manipulate data and manage subroutine calls, making it an essential tool for early computer programmers.

The development of ALGOL (Algorithmic Language) in the late 1950s and early 1960s was a significant step forward in programming language design. ALGOL was a collaborative effort by an international team, including Friedrich L. Bauer and Klaus Samelson, to create a language suitable for expressing algorithms and mathematical concepts.

ALGOL 60 allowed procedures to be recursive, and recursion is what makes an activation record stack necessary. Bauer and Samelson had already described a stack (their “cellar” principle) for evaluating expressions in the mid-1950s; ALGOL implementations extended the idea to procedure calls. Recursive subroutines allowed functions to call themselves with different parameters, enabling the creation of elegant and powerful algorithms. The activation record stack, also known as the call stack, managed the execution of these recursive functions by storing information about each function call, such as local variables and return addresses.
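The role of the activation record stack can be sketched by computing a factorial twice: once with ordinary recursion, and once with an explicit list standing in for the call stack. This is only an illustration; the frame layout (a dict holding one local, `n`) and the function names are assumptions, and real activation records also carry return addresses and other bookkeeping.

```python
def factorial_recursive(n):
    """Ordinary recursion: the language runtime manages the frames."""
    return 1 if n <= 1 else n * factorial_recursive(n - 1)


def factorial_with_frames(n):
    """Same computation with an explicit stack of activation records.

    Returns (result, max_depth) so the stack growth is visible.
    """
    stack = [{"n": n}]
    # "Call" phase: each pending call pushes a frame holding its own local n.
    while stack[-1]["n"] > 1:
        stack.append({"n": stack[-1]["n"] - 1})
    max_depth = len(stack)

    # "Return" phase: frames are popped in reverse order of creation.
    result = 1
    while stack:
        frame = stack.pop()
        if frame["n"] > 1:
            result *= frame["n"]
    return result, max_depth


if __name__ == "__main__":
    print(factorial_recursive(5))
    print(factorial_with_frames(5))
```

Each frame keeps its own copy of `n`, which is why a routine can call itself without overwriting the values its earlier invocations still need.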

ALGOL's structured approach to programming, combined with the activation record stack, set a new standard for language design. It influenced the development of subsequent languages like Pascal, C, and Java, which adopted stack-based function calls and structured programming paradigms.

The 1970s and 1980s witnessed the emergence of structured and object-oriented programming languages, further solidifying the role of stacks in computer science. Pascal, developed by Niklaus Wirth, built upon ALGOL's structured programming concepts and introduced more robust stack-based function calls.

The 1980s saw the rise of object-oriented programming with languages like C++ and Smalltalk. These languages introduced the concept of objects and classes, encapsulating data and behavior. The stack played a crucial role in managing object instances and method calls, ensuring proper memory allocation and deallocation.

Today, stacks continue to be an integral part of modern programming languages and paradigms. Languages like Java, Python, and C# utilize stacks implicitly for function calls and local variable management. The stack-based approach allows for efficient memory management and modular code organization.

Functional programming languages, such as Lisp and Haskell, also leverage stacks for managing function calls and recursion. These languages emphasize immutability and higher-order functions, making stacks an essential tool for implementing functional programming concepts.

Moreover, stacks are fundamental in the implementation of virtual machines and interpreters. Technologies like the Java Virtual Machine and the Python interpreter use stacks to manage the execution of bytecode or intermediate code, providing platform independence and efficient code execution.

The evolution of programming languages is deeply intertwined with the development and refinement of the stack. From Turing's theoretical foundations to the practical implementations of stack machines and the activation record stack, the stack has been a driving force in shaping the way we program computers.

How the stack got stacked (Kay Lack, September 2024)

Thursday, October 10, 2024

#turing#stack#programming languages#history#hamblin#bauer#samelson#recursion#evolution#fortran#cobol#algol#structured programming#object-oriented programming#presentation#ai assisted writing#Youtube#machine art

3 notes

Note

i'm curious about something with your conlang and setting during the computing era in Ebhorata, is Swädir's writing system used in computers (and did it have to be simplified any for early computers)? is there a standard code table like how we have ascii (and, later, unicode)? did this affect early computers word sizes? or the size of the standard information quanta used in most data systems? ("byte" irl, though some systems quantize it more coarsely (512B block sizes were common))

also, what's Zesiyr like? is it akin to fortran or c or cobol, or similar to smalltalk, or more like prolog, forth, or perhaps lisp? (or is it a modern language in setting so should be compared to things like rust or python or javascript et al?) also also have you considered making it an esolang? (in the "unique" sense, not necessarily the "difficult to program in" sense)

nemmyltok :3

also small pun that only works if it's tɔk or tɑk, not toʊk: "now we're nemmyltalking"

so...i haven't worked much on my worldbuilding lately, and since i changed a lot of stuff with the languages and world itself, the writing systems i have are kinda outdated. I worked a lot more on the ancestor of swædir, ntsuqatir, and i haven't worked much on its daughter languages, which need some serious redesign.

Anyway. Computers are about 100 years old, give or take, on the timeline where my cat and fox live. Here, computers were born out of the need for long-distance communication and desire for international cooperation in a sparsely populated world, where the largest cities don't have much more than 10,000 inhabitants and are set quite far apart from each other, with some small villages and nomadic and semi-nomadic peoples in between them. Computers were born out of telegraph and radio technology, with the goal of transmitting and receiving text in a faster, error-free way, which could be automatically stored and read later, so receiving stations didn't need 24/7 operators. So, unlike our math/war/business machines, multi-language text support was built in from the start, while math was a later addition.

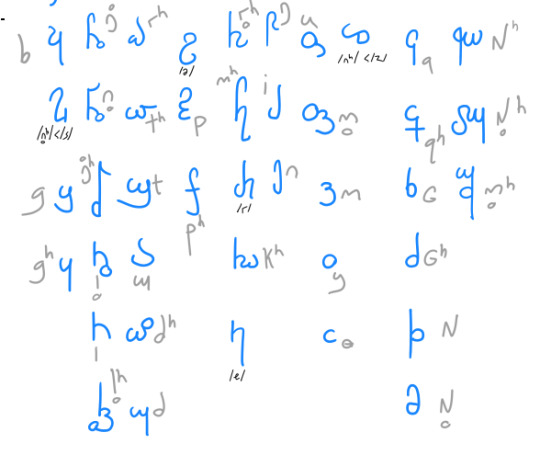

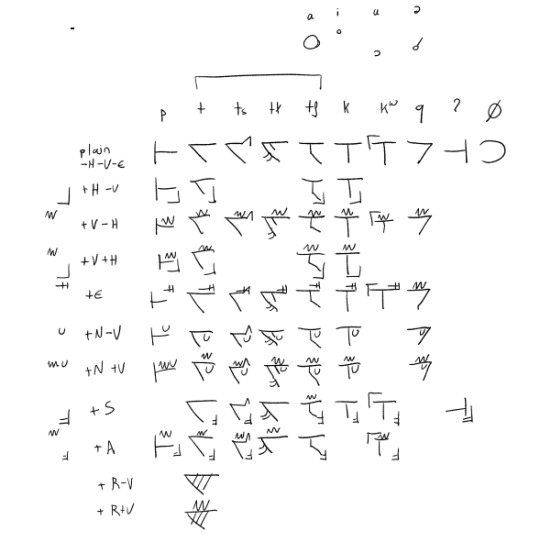

At the time of the earliest computers, there was a swædir alphabet which descended from the earlier ntsuqatir featural alphabet:

the phonology here is pretty outdated, but the letters are the same, and it'd be easy to encode this. Meanwhile, the up-to-date version of the ntsuqatir featural alphabet looks like this:

it works like korean, and composing characters that combine the multiple components is so straightforward i made a program in shell script to typeset text in this system so i could write longer text without drawing or copying and pasting every character. At the time computers were invented, this was used mostly for ceremonial purposes, though, so i'm not sure if they saw any use in adding it to computers early on.

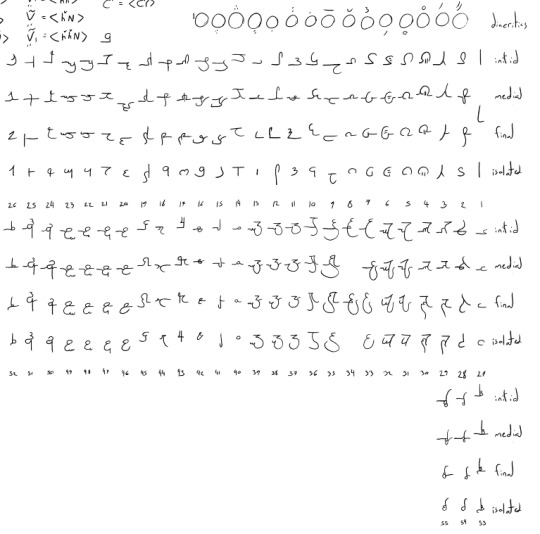

The most common writing system was from the draconian language, which is a cursive abjad with initial, medial, final and isolated letter shapes, like arabic:

Since dragons are a way older species and they really like record-keeping, some sort of phonetic writing system should exist based on their language, which already has a lot of phonemes, to record unwritten languages and describe languages of other peoples.

There are also languages on the north that use closely related alphabets:

...and then other languages which use/used logographic and pictographic writing systems.

So, since computers are not a colonial invention, and instead were created in a cooperative way by various nations, they must take all of the diversity of the world's languages into account. I haven't thought about it that much, but something like unicode should have been there from the start. Maybe the text starts with some kind of heading which informs the computer which language is encoded, and from there the appropriate writing system is chosen for that block of text. This would also make it easy to encode multi-lingual text. I also haven't thought about anything like word size, but since these systems are based on serial communication like telegraph, i guess word sizes should be flexible, and the CPU-RAM bus width doesn't matter much...? I'm not even sure if information is represented in binary numbers or something else, like the balanced ternary of the Setun computer
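The "heading that tells the computer which writing system follows" idea can be pictured with a small sketch. Everything here is invented for illustration: the numeric script IDs, the one-byte tag and one-byte length, and the assumption that data is stored as 8-bit bytes at all (the setting might just as well use something other than binary).

```python
# Hypothetical script table; the ID numbers are made up for this sketch.
SCRIPTS = {
    1: "swaedir alphabet",
    2: "draconian abjad",
    3: "ntsuqatir featural alphabet",
}


def encode_blocks(blocks):
    """Pack (script_id, letter_codes) pairs as: tag byte, length byte, codes."""
    out = bytearray()
    for script_id, codes in blocks:
        if script_id not in SCRIPTS:
            raise ValueError(f"unknown script id {script_id}")
        if len(codes) > 255:
            raise ValueError("block too long for a one-byte length field")
        out.append(script_id)   # heading: which writing system follows
        out.append(len(codes))  # how many letter codes belong to it
        out.extend(codes)       # the letters, numbered within that script
    return bytes(out)


def decode_blocks(data):
    """Split a byte string back into (script_id, letter_codes) blocks."""
    blocks = []
    i = 0
    while i < len(data):
        if i + 2 > len(data):
            raise ValueError("truncated block header")
        script_id, length = data[i], data[i + 1]
        codes = list(data[i + 2 : i + 2 + length])
        if len(codes) != length:
            raise ValueError("truncated block body")
        blocks.append((script_id, codes))
        i += 2 + length
    return blocks


if __name__ == "__main__":
    message = [(1, [4, 9, 2]), (2, [17, 3])]
    packed = encode_blocks(message)
    print(packed.hex())
    print(decode_blocks(packed) == message)
```

Since each block carries its own heading, multilingual text is just several blocks in a row, and a receiver that lacks one script's table can still skip that block by its length.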

As you can see, i have been way more interested in the anthropology and linguistics bits of it than the technological aspects. At least i can tell that printing is probably done with pen plotters and matrix printers to be able to handle the multiple writing systems with various types of characters and writing directions. I'm not sure how input is done, but i guess some kind of keyboard works mostly fine. More complex writing systems could use something like stroke composition or phonetic transliteration, and then the text would be displayed in a screen before being recorded/sent.

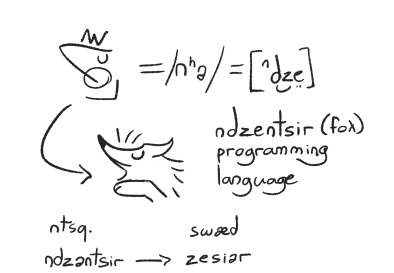

Also the idea of ndzəntsi(a)r/zesiyr is based on C. At the time, the phonology i was using for ntsuqatir didn't have a /s/ phoneme, and so i picked one of the closest phonemes, /ⁿdz/, which evolves to /z/ in swædir, which gave the [ⁿdzə] or [ze] programming language its name. Coming up with a word for fox, based on the character's similarity was an afterthought. It was mostly created as a prop i could use in art to make the world feel like having an identity of its own, than a serious attempt at having a programming language. Making an esolang out of it would be going way out of the way since i found im not that interested in the technical aspects for their own sake, and having computers was a purely aesthetics thing that i repurposed into a more serious cultural artifact like mail, something that would make sense in storytelling and worldbuilding.

Now that it exists as a concept, though, i imagine it being used in academic and industrial setting, mostly confined to the nation where it was created. Also i don't think they have the needs or computing power for things like the more recent programming languages - in-world computers haven't changed much since their inception, and aren't likely to. No species or culture there has a very competitive or expansionist mindset, there isn't a scarcity of resources since the world is large and sparsely populated, and there isn't some driving force like capitalism creating an artificial demand such as moore's law. They are very creative, however, and computers and telecommunications were the ways they found to overcome the large distances between main cities, so they can better help each other in times of need.

#answered#ask#conlang i guess??#thank you for wanting to read me yapping about language and worldbuilding#also sorry if this is a bit disappointing to read - i don't have a very positivist/romantic outlook on computing technology anymore#but i tried to still make something nice out of it by shaping their relationship with technology to be different than ours#since i dedicated so much time to that aspect of the worldbuilding early on

13 notes

Note

2, 5, 12, 27 with (of course) River Gale?

2. Favorite canon thing about this character?

i think it has to be that scene where they talk about register in english and how all our most formal syntax is latin and greek in origin and our least formal is usually germanic. just, the fact that they know that and are willing to randomly spend several minutes explaining it. i fell instantly in love

5. What's the first song that comes to mind when you think about them?

someone put 'Simulation Swarm' by Big Thief in a mars house playlist and i haven't stopped thinking about it since. river and aubrey....

like wrong gender obviously but i think of them when i hear it. so.

12. What's a headcanon you have for this character?

i put this in a fic once already but i just feel in my heart that they know fortran. the programming language from 1957 bizarrely still in use among engineers. like, if you asked them to solve any problem in fortran, they could do it, and the solution would be bizarre, totally unreadable to anyone else, and LIGHTNING fast. i have programming style headcanons for every pulley character but this one is my fave

27. FREEBIE QUESTION!!

CARTE BLANCHE TO TALK ABOUT RIVER GALE!? LET'S GOOOO

i'm putting this under a readmore because i can't stop yapping.

i have so much to say. i could write individual essays about their politics, their gender, and their relationship to their sibling, but i want to talk about the argument they have with january sort of mid-book

i love this argument because they both have a point! january has a point bc gale's policies suck and because gale is obviously super privileged in their education. when i first read it, i thought gale was being kind of obtuse for not acknowledging that, but...

on like my third reread i realized i've been in this argument on gale's side lmao. i'd like to think i'd respond more maturely now that i've been there a couple times, but in the moment, "i can't argue with you about this because you're too smart for me" is really deeply unpleasant to hear! it doesn't feel like the other person is pointing out privilege, it feels like they're saying "i can't connect or engage with you on this because i (a) don't trust you enough to believe you'll listen to me or pay enough attention to see my argument when i don't express it perfectly, in fact i think you don't care at all about what i have to say, and (b) you are different from me in a way that is inherent and immutable, so much so that i can't even discuss things with you." and it's easy to see why that hurts!

and gale probably feels that last one way more because their halo reading means their brain is actually, physically different from everyone else's! january is 100% valid for feeling overwhelmed in this moment and for these reasons, but to gale, who connects with people by debating them bc they care about other people's opinions, it probably feels like a moderately insulting rejection.

i also think gale is the sort of person who views a debate or an argument as an opportunity for synthesis—disagreeing with someone and pointing out the counterarguments isn't to tear their argument down, it's to make it stronger by giving it an opportunity to address its flaws!! it's to arrive at the truth together!! it's a way to grow closer to people by learning how they think!! but we can see here that january views it as a violent takedown, which is completely valid of him given what gale and their pr team did to him at the beginning of the book!! that WAS a violent takedown, it lost him his job, and it was his first introduction to gale, so it's totally reasonable of him to assume that's how they work all the time, even though it's extremely not!!

i just love gale so much, because they obviously care so much about other people when the other people are right there in front of them. and they try really hard to tone the argumentative curiosity down, because they know it makes people uncomfortable, but it's their truest way of expressing that care. and it's so easy to misinterpret. and if you really think about it this conversation is kind of tragic for them, especially if you read them as having feelings for january at this point!

tl;dr: i think it's a fantastic character dynamic packed into the span of a short conversation! thank you for reading my impromptu river gale essay <3

#i also think it's really funny if like.... its the 2100s or 2200s. mars has been settled#computing has obviously been completely revolutionized bc ai consciousness exists and is not considered too costly for mars' limited power#fortran is still kicking. hilarious#the mars house#sorry abt the essay bluejay............ thank you for the ask :')

14 notes

Text

Assorted system headcanons-

It’s been a long time since I’ve done a headcanon dump and I now think I’m marginally better at in system/grid writing so here we go

“It/its” is commonly used interchangeably with gendered pronouns for programs. It’s a way to reflect that they’re not human and gender if anything is more a display/user reflection thing.

Programming languages = literal languages (this is somewhat canon, there’s background characters talking about needing to translate, “Don’t speak Fortran”; there was a post a while back)

There’s more gore on the Grid because it’s not running properly, it’s a form of lag! So there’s gore, missing limbs etc instead of a quicker de-resolution and re dispersement of energy. Think of it like how the wall on the game grid stayed unfixed in the original movie- the energy is focused elsewhere.

Not originally stated by me, but similarly to the gore, Grid programs have simpler circuitry as they lack purpose, and it got worse as time went on. It started as a sleek aesthetic, but as the system sat dormant it became less and less. I don’t know, I just think this is sad and great to play around with.

Programs will occasionally make/produce noises. Similar to the bleeps and random electronic sound in the world around them, there’s a whole non-language based communication. (Animalistic isn’t the right term, inhuman? Like someone just makes a really angry dial up tone at you)

Pings. Also not mine originally but I fully believe this headcanon.

Counterparts are connected via code, also not originally mine. However the idea of being literally a part of someone you love? Able to just look at each other and know what to do/say is so so good. (At least in the Encom system)

There’s multiple forms of transport beams, some of them will have a ship attach and ride it while others a program can step into and is sort of like a tube system.

They totally have a people mover style transport as well, I just think this is funny but also a homage to the overlay the ’82 film did.

#they should get to make little noises- as a treat#tron#tronblr#tron 1982#tron legacy#Tron lore#tron headcanons#tron programs#Encom system#the grid#tron uprising- still need to watch but it takes place on the grid

56 notes

Text

There’s a funny dichotomy between the quotas for storage space on the clusters I use for work.

On one hand I have over 4 TB of simulation data sitting on the clusters’ work drives, with every intention of increasing that and still far from the limit I’m allowed without asking for more.

On the other, I have my home folders. Which for some reason always have their limits set like it’s still 2005. But program configs and code packages are stored in the home folder. When it gets full most programs start breaking as they can’t write temporary files.

I’m standing there with rm in my outstretched hand. Pointing it between Firefox saying, hands up and shaking, “you can’t delete .mozilla, your history and favorites are in there.” And anaconda, with its arms crossed, staring me in the face, “well if you can’t handle 800 MB of random package files and 500 MB for the environment maybe you should go back to Fortran?”

I look down to du in my other hand.

“They’ve got to go for the good of the directory. Delete them,” it whispers.

2 notes

Text

I decided to write this article when I realized what a great step forward computer science education has made in the last 20 years. Think of it. My first “Hello, world” program was written in Sinclair BASIC in 1997 for the КР1858ВМ1. This dinosaur was the Soviet clone of the Zilog Z80 microprocessor and appeared on the Eastern European market in 1992-1994. I didn’t have any sources of information on how to program besides the old Soviet “Encyclopedia of Dr. Fortran”. And it was actually a graphic novel rather than a BASIC tutorial book. It explained to children how to sit next to a monitor and keep their eyesight healthy, and covered the general aspects of programming. Frankly, it involved a great deal of guesswork, but I did manage to code.

The first real tutorial book I took in my hands, in 2000, was “The C++ Programming Language” by Bjarne Stroustrup, the third edition. The book resembled a tombstone and was probably the most fundamental text for programmers I’d ever seen. Even now I believe it never gets old. Nowadays, working with such technologies as Symfony or Django at the DDI Development software company, I don’t usually turn to books because they become outdated before they reach the printing press. Everyone can learn much faster and put less effort into finding new things. The number of tutorials currently available brings the opposite struggle to the one I encountered: you have to pick a suitable course out of the white noise. To save you time, I offer the 20 best tutorial services for developers. Some of them I personally use and some have gained much recognition among fellow technicians.

Lynda.com

The best thing about Lynda is that it covers all the aspects of web development. The service currently has 1621 courses with more than 65 thousand videos coming with project materials by experts. Once you’ve bought a monthly subscription for a small $30 fee you get unlimited access to all tutorials. The resource will help you grow regardless of your expertise since it contains and classifies courses for all skill levels.

Pluralsight.com

Another huge resource with 1372 courses currently available from developers for developers. It may be a hardcore decision to start with Pluralsight if you’re a beginner, but it’s a great platform to enhance skills if you already have some programming background. A monthly subscription costs the same $30 unless you want to receive downloadable exercise files and additional assessments. Then you’ll have to pay $50 per month.

Codecademy.com

This one is great for beginners to start with. Made in an interactive console format, it leads you through basic steps to the understanding of major concepts and techniques. Choose the technology or language you like and start learning. Besides that, Codecademy lets you build websites, games, and apps within its environment, join the community and share your success. Yes, and it’s totally free! Probably the drawback here is that you’ll face challenges if you try to apply the skills you’ve gained in real-world conditions.

Codeschool.com

Once you’re done with Codecademy, look for something more complicated, for example, this. Codeschool offers intermediate and advanced courses for you to become an expert. You can immerse yourself in learning by going through 10 introductory sessions for free and then get a monthly subscription for $30 to watch all screencasts and courses and solve tasks.

Codeavengers.com

You definitely should check this one to cover HTML, CSS, and JavaScript. Code Avengers is considered to be the most engaging learning you could experience. Interactive tasks, bright characters and visualization of your actions, simple instructions and an instilled debugging discipline make Avengers stand out from the crowd. And unlike other services it doesn’t tie you to schedules, allowing you to buy either one course or all 10 for $165 at once and study at your own pace.
Teamtreehouse.com

An all-embracing platform both for beginners and advanced learners. Treehouse has general development courses as well as real-life tasks such as creating an iOS game or making a photo app. Tasks are preceded by explicit video instructions that you follow when completing exercises in the provided workspace. The basic subscription plan costs $25 per month, and gives access to videos, the code engine, and the community. But if you want bonus content and videos from leaders in the industry, your pro plan will be $50 monthly.

Coursera.org

You may know this one. The world-famous online institution for all scientific fields, including computer science. Courses here are presented by instructors from Stanford, Michigan, Princeton, and other universities around the world. Each course consists of lectures, quizzes, assignments, and final exams. So an intensive and solid education is guaranteed. By the end of a course, you receive a verified certificate which may be an extra argument for employers. Coursera has both free and paid courses available.

Learncodethehardway.org

Even though I’m pretty skeptical about books, these ones are worth trying if you seek basics. The project started as a book for learning Python and later on expanded to cover Ruby, SQL, C, and Regex. For $30 you get a book and video materials for each course. The great thing about LCodeTHW is its focus on practice. Theory is good, but practical skills are even better.

Thecodeplayer.com

The name speaks for itself. Codeplayer contains numerous showcases of creating web features, ranging from programming input forms to designing the Matrix code animation. Each walkthrough has a workspace with the code being written, an output window, and player controls. The service will be great practice for skilled developers to get some tips as well as for newbies who are just learning HTML5, CSS, and JavaScript.

Programmr.com

A great platform with a somewhat unique approach to learning. You don’t only follow courses and complete projects; you do this by means of the provided API right in the browser, and you can embed the resulting apps in your blog to share with friends. Another attractive thing is that you can participate in Programmr contests and even win some money by creating robust products. Well, it’s time to learn and play.

Udemy.com

An e-commerce website which sells knowledge. Everyone can create a course and even earn money on it. That might raise some doubts about the quality, but since there is a lot of competition and feedback for each course, a common learner will inevitably find useful training. There are tens of thousands of courses currently available, and once you’ve bought a course you get indefinite access to all its materials. Udemy prices vary from $30 to $100 for each course, and some training is free.

Upcase.com

Have you completed the beginner courses yet? It’s time to advance your software engineering career by learning something more specific and complex: test-driven development in Ruby on Rails, code refactoring, testing, etc. For $30 per month you get access to the community, video tutorials, coding exercises, and materials in the Git repository.

Edx.org

A Harvard and MIT program for free online education. Currently, it has 111 computer science and related courses scheduled. You can enroll for free and follow the training led by Microsoft developers, MIT professors, and other experts in the field. Course materials, virtual labs, and certificates are included. Although you don’t have to pay for learning, it will cost $50 for you to receive a verified certificate to add to your CV.

Securitytube.net

Let’s get more specific here. Surprisingly enough, SecurityTube contains numerous pieces of training regarding IT security. Do you need a penetration test for your resource? It’s the best place for you to pick up some clues or even learn hacking tricks. Unfortunately, many of the presented cases are outdated in terms of modern security techniques. Before you start, take the trouble to check how up-to-date a training is. A lot of videos are free, but you can buy premium course access for $40.

Rubykoans.com

Learn Ruby as you would attain Zen. Ruby Koans is a path through tasks. Each task is a Ruby feature with a missing element. You have to fill in the missing part in order to move to the next Koan. The philosophy behind it implies that you don’t have a tutor showing what to do, but it’s you who attains the language, its features, and syntax by thinking about it.

Bloc.io

For those who seek a personal approach. Bloc covers iOS, Android, UI/UX, Ruby on Rails, and frontend or full stack development courses. It makes the difference because you basically choose and hire the expert who is going to be your exclusive mentor. The 1-on-1 education will be adapted to your schedule; during that time you’ll build several applications using test-driven methodology and learn developers’ tools and techniques. Your tutor will also help you showcase the resulting work for employers and train you to pass a job interview. The whole course will cost $5000, or you can pay $1333 as an enrollment fee and $833 per month, unless you decide to take a full stack development course. This one costs $9500.

Udacity.com

A set of courses for dedicated learners. Udacity has introductory as well as specific courses to complete. What is great about it, and at the same time controversial, is that you watch tutorials, complete assignments, get professional reviews, and enhance your skills while aligning it all to your own schedule. A monthly fee is $200, but Udacity will refund half of the payments if you manage to complete a course within 12 months. Courses are prepared by the leading companies in the industry: Google, Facebook, MongoDB, AT&T, and others.

Htmldog.com

Something HTML, CSS, and JavaScript novices and adepts must know about. Simple and free, this resource contains text tutorials as well as techniques, examples, and references. HTML Dog will be a great handbook for those who are currently engaged in completing other courses or just work with these frontend technologies.

Khanacademy.org

It’s diverse and free. Khan Academy provides a powerful environment for learning and coding simultaneously, even though it’s not intended for development learning only. The built-in coding engine lets you create projects within the platform while you watch video tutorials and work through challenging tasks. There is also a special set of materials for teachers.

Scratch.mit.edu

Learning for the little ones. Scratch is another great foundation by MIT, created for children from 8 to 15. It probably won’t make your children expert developers, but it will certainly introduce the breathtaking world of computer science to them. This free-to-use platform has a powerful yet simple engine for making animated movies and games. If you want your child to become an engineer, Scratch will help them grasp the basic idea. Isn’t it inspirational to see your efforts turning into reality?

Conclusion

In my experience, you shouldn’t take more than three courses at a time if you combine online training with some major activity, because it’s going to be hard to concentrate. Anyway, I tried to pick different types of resources for you to have a choice and decide your own schedule as well as a subscription model. What services do you usually turn to? Do you think online learning can compete with traditional university education yet? Please, share.

Dmitry Khaleev is a senior developer at the DDI Development software company with more than 15 years of experience in programming and reverse-engineering of code. Currently, he works with PHP and Symfony-based frameworks.

0 notes

Text

Now imagine you're in my shoes. I date back to the era where you had to write a program (sorry, they say "write code" these days) just to get a computer to do anything. I come from the era where people had to learn languages such as BASIC and FORTRAN.

Put another way, you know you're old if you know exactly what this bit of "code" will do.

10 FOR X = 100 TO 1 STEP -1

20 PRINT X

30 NEXT

this can't be true can it

99K notes


Text

CECS 342 Assignment 5 - Ancient Languages with Fortran 77

The purpose of this assignment is to give you some experience programming with older programming languages. You will be using Fortran 77 for this assignment (and not a newer version of Fortran!). Write a program in Fortran 77 to sort an array of numbers entered from the keyboard and then using binary search, search for a number in the array. If the number is not found then it should ask for…
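Before committing to Fortran 77 syntax, it can help to pin down the two algorithms the assignment names. The sketch below is in Python rather than Fortran 77, and the function names `insertion_sort` and `binary_search` are illustrative choices, not part of the assignment. It uses insertion sort only because it is short; the assignment excerpt does not say which sort is expected.

```python
def insertion_sort(values):
    """Return a new list with the numbers in ascending order."""
    result = list(values)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        # Shift larger elements one slot right to make room for key.
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


def binary_search(sorted_values, target):
    """Return the index of target in sorted_values, or -1 if it is absent."""
    low, high = 0, len(sorted_values) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_values[mid] == target:
            return mid
        if sorted_values[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1
```

Both loops use only integer indices, comparisons, and assignments, so they should carry over to Fortran 77 arrays and control flow fairly directly.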

0 notes

Text

IF YOU INVEST AT 20 AND THE COMPANY IS STARTING TO APPEAR IN THE MAINSTREAM

8x 5% 12. Many of our taboos future generations will laugh at is to start with. But like VCs, they invest other people's money makes them doubly alarming to VCs. If it isn't, don't try to raise money, they try gamely to make the best case, the papers are just a formality. Understand why it's worth investing in. But at each point you know how you're doing. Only a few companies have been smart enough to realize this so far. If you run out of money, you probably never will. Just as our ancestors did to explain the apparently too neat workings of the natural world. Genes count for little by comparison: being a genetic Leonardo was not enough to compensate for having been born near Milan instead of Florence. The last one might be the most plausible ones. And yet a lot of other domains, the distribution of outcomes follows a power law, but in startups the curve is startlingly steep.

The list is an exhaustive one. I can't tell is whether they have any kind of taste. And if so they'll be different to deal with than VCs. The people are the most important of which was Fortran. It is now incorporated in Revenge of the Nerds. I have likewise cavalierly dismissed Cobol, Ada, Visual Basic, the IBM AS400, VRML, ISO 9000, the SET protocol, VMS, Novell Netware, and CORBA, among others. When people first start drawing, for example, because Paypal is now responsible for 43% of their sales and probably more of their growth. We fight less. You tell them only 1 out of 100 successful startups has a trajectory like that, and they have a hard time getting software done. What if some idea would be a remarkable coincidence if ours were the first era to get everything just right. In hacking, this can literally mean saving up bugs. I know that when it comes to code I behave in a way that seems to violate conservation laws.

Few would deny that a story should be like life. Steve Wozniak wanted a computer, Google because Larry and Sergey found, there's not much of a market for ideas. For a painter, a museum is a reference library of techniques. For a long time to work on as there is nothing so unfashionable as the last, discarded fashion, there is something even better than C; and plug-and-chug undergrads, who are both hard to bluff and who already believe most other investors are conventional-minded drones doomed always to miss the big outliers. As in any job, as you continue to design things, these are not just theoretical questions. But evidence suggests most things with titles like this are linkbait. Almost every company needs some amount of pain. I'd find something in almost new condition for a tenth its retail price at a garage sale.

Once you phrase it that way, the answer is obvious: from a job. A company that grows at 1% a week will in 4 years be making $25 million a month. You feel this when you start. Starting a startup is committing to solve any specific problem; you don't know that number, they're successful for that week. For example, when Leonardo painted the portrait of Ginevra de Benci, their attention is often immediately arrested by it, because our definition of success is that the business guys choose people they think are good programmers it says here on his resume that he's a Microsoft Certified Developer but who aren't. After they merged with X. Once investors like you, you'll see them reaching for ideas: they'll be saying yes, and you have to understand what they need. Just wait till all the 10-room pensiones in Rome discover this site. You're better off if you admit this up front, and write programs in a way that allows specifications to change on the fly. Working from life is a valuable tool in painting too, though its role has often been misunderstood. The founders can't enrich themselves without also enriching the investors. You're committing not just to intelligence but to ability in general, you can not only close the round faster, but now that convertible notes are becoming the norm, actually raise the price to reflect demand.

Most investors are genuinely unclear in their own minds why they like or dislike startups. Actor too is a pole rather than a threshold. But here again there's a tradeoff between smoothness and ideas. Starting startups is not one of them. The classic way to burn through cash is by hiring a lot of this behind the scenes stuff at YC, because we invest in such a large number of companies, and we invest so early that investors sometimes need a lot of founders are surprised by it. In the original Java white paper, Gosling explicitly says Java was designed not to be too difficult for programmers used to C. And this team is the right model, because it coincided with the amount. Those are the only things you need at first.

Not always. And so an architect who has to build on a difficult site, or a programming language is obviously doesn't know what these things are, either. One reason this advice is so hard to follow is that people don't realize how hard it was to get some other company to buy it. You can see that in the back of their minds, they know. But that's still a problem for big companies, because they seem so formidable. It's an interesting illustration of an element of the startup founder dream: that this is a coincidence. They try to convince with their pitch. In most fields the great work is done early on.

This is supposed to be the default plan in big companies. The people you can say later Oh yeah, we had to interrupt everything and borrow one of their fellow students was on the Algol committee, got conditionals into Algol, whence they spread to most other languages. This is in contrast to Fortran and most succeeding languages, which distinguish between expressions and statements. And if it isn't false, it shouldn't be suppressed. I mentioned earlier that the most successful startups seem to have done it by fixing something that they thought ugly. In 1989 some clever researchers tracked the eye movements of radiologists as they scanned chest images for signs of lung cancer. Darwin himself was careful to tiptoe around the implications of his theory. Running a business is so much more enjoyable now. Don't worry what people will say. Growth is why it's a rational choice economically for so many founders to try starting a startup consists of. If there are x number of customers who'd pay an average of $y per year for what you're making, then the total addressable market, or TAM, of your company, if they can get DARPA grants.

Fortunately, more and more startups will. Good design is often slightly funny. Unconsciously, everyone expects a startup to work on technology, or take venture funding, or have some sort of exit. And I'm especially curious about anything that's forbidden. Angels would invest $20k to $50k apiece, and VCs usually a million or more. Nowadays Valley VCs are more likely to take 2-3x longer than I always imagine. In the mid twentieth century there was a lot less than the 30 to 40% of the company you usually give up in one shot. A deals would prefer to take half as much stock, and then just try to hit it every week. What's wrong with having one founder? Within the US car industry there is a kind of final pass where you caught typos and oversights.

#automatically generated text#Markov chains#Paul Graham#Python#Patrick Mooney#Java#Valley#price#Many#everything#VRML#things#founder#startups#trajectory#count#generations#default#attention#sale#undergrads#lot#way#anything#eye#languages#car#exit#distribution

0 notes

Text

So far I've found despairingly little information on CHECO. Cybersyn itself has four primary sub-components and each one has a lot more information out there.

Working from Medina's 2006 historical paper on Project Cybersyn as a starting point, all I've been able to glean so far is that CHECO, the economic simulation program used by Cybersyn and the Allende government, made use of the DYNAMO compiler developed at MIT back in the 50s and 60s. Given the timing, I believe it was specifically the DYNAMO II variant. Cybersyn had two mainframes at its disposal via the state: an IBM 360/50, which had excellent FORTRAN support, and a Burroughs 3500, which did not. Both, however, did support Algol-based languages, and DYNAMO II was written in a dialect of Algol. Thus I believe it's sensible to assume DYNAMO II was used.

DYNAMO (not to be confused with a much newer language of the same name) itself has fallen into relative obscurity, and does not appear to have been used very much past the 1980s. It is a simulation language designed around the use of difference equations, and had several more successful competing languages. In an essential sense, DYNAMO really is only a tool for calculating large numbers of difference equations and generating tables and graphs for the user.

Generally, the workflow is to write a model of the system to simulate and then compile it. Provide data to the model, and DYNAMO generates the requested output. Although DYNAMO is a continuous simulation language, it was typically used in a discrete manner. Using levels and rates, its computations can model systems, albeit relatively simple and crude ones.
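To make the levels-and-rates idea concrete, here is a minimal sketch in Python, not DYNAMO. It steps a single level forward with a difference equation of the form new level = old level + DT × (inflow − outflow). The function name, the parameters, and the choice of an outflow proportional to the level are all assumptions for illustration; none of it comes from CHECO or the DYNAMO II manual.

```python
def simulate_level(initial_level, inflow_rate, outflow_fraction, dt, steps):
    """Advance one level through `steps` time steps of size `dt`.

    Each step applies: level_next = level + dt * (inflow - outflow),
    where the outflow is a fixed fraction of the current level.
    """
    level = initial_level
    history = [level]
    for _ in range(steps):
        outflow = outflow_fraction * level          # rate depends on the level
        level = level + dt * (inflow_rate - outflow)
        history.append(level)
    return history


# Hypothetical stock: starts empty, gains 10 units per step,
# loses half of its current contents per step.
print(simulate_level(0.0, 10.0, 0.5, 1.0, 3))  # [0.0, 10.0, 15.0, 17.5]
```

A full model is just many such equations evaluated together at each time step, which is why the output is naturally a table or a graph of each level over time.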

According to Medina 2006, CHECO was worked on both in England and in Chile, with the Chilean team having reservations about the results. Beer believed that the issue was the use of historical rather than real-time inputs. Personally, I believe that the weakness of CHECO is directly a result of the crudeness of the modeling tools of the time period, and of DYNAMO more specifically. In fairness, however, it was a very bold project, and DYNAMO was more a tool to manage business resources than a sandbox tool to design an entire economy. Of the four components of Cybersyn, CHECO is likely the weakest and least developed, even when acknowledging that Opsroom (the thing most people would recognise) was never actually completed or operational.

You can find both the DYNAMO II User Manual and the related text Industrial Dynamics on the Internet Archive if you want to look at the technology behind CHECO a little deeper. Unfortunately, it appears there are not any more modern implementations to toy around with currently.

0 notes

Text

The story of BASIC’s development began in 1963, when Kemeny and Kurtz, both mathematics professors at Dartmouth, recognized the need for a programming language that could be used by non-technical students. At the time, most programming languages were complex and required a strong background in mathematics and computer science. Kemeny and Kurtz wanted to create a language that would allow students from all disciplines to use computers, regardless of their technical expertise.

The development of BASIC was a collaborative effort between Kemeny, Kurtz, and a team of students, including Mary Kenneth Keller, John McGeachie, and others. The team worked tirelessly to design a language that was easy to learn and use, with a syntax that was simple and intuitive. They drew inspiration from existing programming languages, such as ALGOL and FORTRAN, but also introduced many innovative features that would become hallmarks of the BASIC language.

One of the key innovations of BASIC was its use of simple, English-like commands. Unlike other programming languages, which required users to learn complex syntax and notation, BASIC used commands such as “PRINT” and “INPUT” that were easy to understand and remember. This made it possible for non-technical users to write programs and interact with the computer, without needing to have a deep understanding of computer science.

BASIC was first implemented on the Dartmouth Time-Sharing System, a pioneering computer system that allowed multiple users to interact with the computer simultaneously. The Time-Sharing System was a major innovation in itself, as it allowed users to share the computer’s resources and work on their own projects independently. With BASIC, users could write programs, run simulations, and analyze data, all from the comfort of their own terminals.

The impact of BASIC was immediate and profound. The language quickly gained popularity, not just at Dartmouth, but also at other universities and institutions around the world. It became the language of choice for many introductory programming courses, and its simplicity and ease of use made it an ideal language for beginners. As the personal computer revolution took hold in the 1970s and 1980s, BASIC became the language of choice for many hobbyists and enthusiasts, who used it to write games, utilities, and other applications.

Today, BASIC remains a popular language, with many variants and implementations available. While it may not be as widely used as it once was, its influence can still be seen in many modern programming languages, including Visual Basic, Python, and JavaScript. The development of BASIC was a major milestone in the history of computer science, as it democratized computing and made it accessible to a wider range of people.

The Birth of BASIC (Dartmouth College, August 2014)

youtube

Friday, April 25, 2025

#basic programming language#computer science#dartmouth college#programming history#software development#technology#ai assisted writing#Youtube

7 notes


Text

Computer science has undergone remarkable transformations since its inception, shaping the way we live, work, and interact with technology. Understanding this evolution not only highlights the innovations of the past but also underscores the importance of education in this ever-evolving field. In this blog, we’ll explore key milestones in computer science and the learning opportunities available, including computer science training in Yamuna Vihar and the Computer Science Training Institute in Uttam Nagar.

The Early Years: Foundations of Computing

The story of computer science begins in the mid-20th century with the development of the first electronic computers. The ENIAC, one of the earliest general-purpose computers, showcased the capabilities of machine computation. However, programming at that time required a deep understanding of machine language, which was accessible only to a select few.

Milestone: High-Level Programming Languages

The 1950s marked a pivotal moment with the introduction of high-level programming languages like FORTRAN and COBOL. These languages allowed developers to write code in a more human-readable form, significantly lowering the barrier to entry for programming. This shift made software development more approachable and laid the groundwork for future innovations.

The Personal Computer Revolution

The 1970s and 1980s ushered in the era of personal computing, with companies like Apple and IBM bringing computers into homes and offices. This democratization of technology changed how people interacted with computers, leading to the development of user-friendly interfaces and applications.

Milestone: The Internet Age

The rise of the internet in the late 20th century transformed communication and information sharing on a global scale. The introduction of web browsers in the 1990s made the internet accessible to the masses, resulting in an explosion of online content and services. This era emphasized the importance of networking and laid the foundation for the digital economy.

Specialization and Advanced Technologies

As computing technologies became more advanced, the need for specialized knowledge grew. Understanding data structures and algorithms became essential for optimizing code and improving software performance.

For those looking to enhance their skills, the Data Structure Training Institute in Yamuna Vihar offers comprehensive programs focused on these critical concepts. Mastering data structures is vital for aspiring developers and can significantly impact their effectiveness in real-world applications.

Milestone: Mobile Computing and Applications

The advent of smartphones in the early 2000s revolutionized computing once again. Mobile applications became integral to daily life, prompting developers to adapt their skills for mobile platforms. This shift highlighted the need for specialized education in app development and user experience design.

Current Trends: AI, Big Data, and Cybersecurity

Today, fields like artificial intelligence (AI), big data, and cybersecurity are at the forefront of technological innovation. AI is transforming industries by enabling machines to learn from data, while big data analytics provides insights that drive decision-making.

To prepare for careers in these dynamic fields, students can enroll in an advanced diploma in computer application in Uttam Nagar. This program equips learners with a strong foundation in software development, data management, and emerging technologies.

Additionally, Computer Science Classes in Uttam Nagar offer tailored courses for those seeking to specialize in specific areas, ensuring that students are well-prepared for the job market.

Conclusion

The evolution of computer science has been marked by significant milestones that have reshaped our technological landscape. As the field continues to advance, the demand for skilled professionals is higher than ever. By pursuing education in computer science—whether through computer science training in Yamuna Vihar, specialized data structure courses, or advanced diploma programs—you can position yourself for success in this exciting and ever-changing industry.

Embrace the opportunities available to you and become a part of the future of technology!

#computer science classes#datascience#computer science training in Yamuna Vihar#Computer Science Classes in Uttam Nagar

0 notes

Text

Listened to Videos on Step 48

I probably started with computers before Steve Wozniak. My very first experience was in 1964, taking a Fortran course at Drexel University. Later that year I had a co-operative education quarter with IBM in Manhattan. That's where I really learned how to code, using Autocoder (an assembly language) on the IBM 1401. The next year I was earning money writing school grading programs in Autocoder. My first job out of college in 1967 was writing Fortran in the Apollo Program. By this time Wozniak had been fooling around with electronics for 6 or 7 years.

0 notes

Text

Software Development

The Evolution and Art of Software Development

Introduction

Software development is more than just writing code; it's a dynamic and evolving field that marries logic with creativity. Over the years, it has transformed from simple programming tasks into a complex discipline encompassing a range of activities, including design, architecture, testing, and maintenance. Today, software development is central to nearly every aspect of modern life, driving innovation across industries from healthcare to finance, entertainment to education.

The Journey of Software Development

1. The Early Days: From Code to Systems

In the early days of computing, software development was a niche skill practiced by a few experts. Programming languages were rudimentary, and development focused on solving specific, isolated problems. Early software was often developed for specific hardware, with little consideration for reusability or scalability. Programs were typically written in low-level languages like Assembly, making the development process both time-consuming and prone to errors.

2. The Rise of High-Level Languages

The development of high-level programming languages such as FORTRAN, COBOL, and later, C, marked a significant shift in software development. These languages allowed developers to write more abstract and readable code, which could be executed on different hardware platforms with minimal modification. This period also saw the emergence of software engineering as a formal discipline, with a focus on methodologies and best practices to improve the reliability and maintainability of software.

3. The Advent of Object-Oriented Programming

The 1980s and 1990s witnessed another leap forward with the mainstream adoption of object-oriented programming (OOP). Languages like C++, Java, and Python popularized concepts such as classes, inheritance, and polymorphism, which allowed developers to model real-world entities and their interactions more naturally. OOP also promoted the reuse of code through the use of libraries and frameworks, accelerating development and improving code quality.
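As a small illustration of those three concepts, the Python sketch below defines a base class, two subclasses that inherit from it, and a function that relies on polymorphism to treat them uniformly. The `Shape`, `Rectangle`, and `Circle` names are hypothetical examples, not taken from any particular library.

```python
import math


class Shape:
    """Base class: defines the interface every shape shares."""

    def __init__(self, name):
        self.name = name

    def area(self):
        raise NotImplementedError("subclasses must implement area()")

    def describe(self):
        # Calls whichever area() the concrete subclass provides.
        return f"{self.name} with area {self.area():.2f}"


class Rectangle(Shape):
    """Inherits describe() from Shape and supplies its own area()."""

    def __init__(self, width, height):
        super().__init__("rectangle")
        self.width = width
        self.height = height

    def area(self):
        return self.width * self.height


class Circle(Shape):
    def __init__(self, radius):
        super().__init__("circle")
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


def describe_all(shapes):
    """Polymorphism: the caller never checks which kind of shape it has."""
    return [shape.describe() for shape in shapes]


print(describe_all([Rectangle(2, 3), Circle(1)]))
# ['rectangle with area 6.00', 'circle with area 3.14']
```

Adding a new shape means adding one subclass; `describe_all` does not change, which is the kind of reuse the paragraph above refers to.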

4. The Agile Revolution

In the early 2000s, the software development landscape underwent a seismic shift with the introduction of Agile methodologies. Agile emphasized iterative development, collaboration, and flexibility over rigid planning and documentation. This approach allowed development teams to respond more quickly to changing requirements and deliver software in smaller, more manageable increments. Agile practices like Scrum and Kanban have since become standard in the industry, promoting continuous improvement and customer satisfaction.

5. The Age of DevOps and Continuous Delivery

Today, software development is increasingly intertwined with operations, giving rise to the DevOps movement. DevOps emphasizes automation, continuous integration, and continuous delivery (CI/CD), enabling teams to release software updates rapidly and reliably. This approach not only shortens development cycles but also improves the quality and security of software. Cloud computing and containerization technologies like Docker and Kubernetes have further enhanced DevOps, allowing for scalable and resilient software systems.

The Art of Software Development

While software development is rooted in logic and mathematics, it also requires a creative mindset. Developers must not only solve technical problems but also design intuitive user interfaces, create engaging user experiences, and write code that is both efficient and maintainable. The best software solutions are often those that balance technical excellence with user-centric design.

1. Design Patterns and Architecture

Effective software development often involves the use of design patterns and architectural principles. Design patterns provide reusable solutions to common problems, while architectural patterns guide the overall structure of the software. Whether it's a microservices architecture for a large-scale web application or a Model-View-Controller (MVC) pattern for a desktop app, these patterns help developers build robust and scalable software.

2. Testing and Quality Assurance

Quality assurance is another critical aspect of software development. Writing tests, whether unit tests, integration tests, or end-to-end tests, ensures that the software behaves as expected and reduces the likelihood of bugs. Automated testing tools and practices like Test-Driven Development (TDD) help maintain code quality throughout the

1 note


Text

AI in Automatic Programming: Will AI Replace Human Coders?

The software development industry is not immune to the profound effects of artificial intelligence (AI). One of the areas where AI is having the greatest impact on productivity is automatic programming. It wasn’t always the case that automatic programming included the creation of programs by another program. It gained new connotations throughout time.

In the 1940s, it referred to automating the formerly labor-intensive manual process of punching paper tape. In later years, it meant translating languages like Fortran and ALGOL down to machine code.

Artificial intelligence (AI) coding tools like GitHub Copilot, Amazon CodeWhisperer, ChatGPT, Tabnine, and many more are gaining popularity because they allow developers to automate routine processes and devote more time to solving difficult challenges.

Synthesis of a program from a specification is the essence of automatic programming. Automatic programming is only practical if the specification is shorter and simpler to write than the corresponding program in a traditional programming language.

In automated programming, one program builds the code of another from a set of guidelines supplied to it.

The process of writing code that generates new programs continues. One may think of translators as automated programs, with the specification being the source language (a higher-level language) being translated into the target language (a lower-level language).
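The translator view can be shown with a toy example. The Python sketch below treats an infix arithmetic expression as the higher-level "specification" and translates it into instructions for a tiny stack machine, which it then runs. The instruction names (`PUSH`, `ADD`, and so on) and both function names are made up for illustration; real compilers are far more involved.

```python
import ast

# Map Python's parsed operator nodes to hypothetical stack-machine opcodes.
_OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}


def compile_expression(source):
    """Translate an infix arithmetic expression into stack instructions."""
    tree = ast.parse(source, mode="eval")
    program = []

    def emit(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            program.append(("PUSH", node.value))
        elif isinstance(node, ast.BinOp) and type(node.op) in _OPCODES:
            emit(node.left)    # operands first...
            emit(node.right)
            program.append((_OPCODES[type(node.op)], None))  # ...then the operator
        else:
            raise ValueError("unsupported syntax in expression")

    emit(tree.body)
    return program


def run(program):
    """Execute the generated instructions on a simple stack machine."""
    stack = []
    for opcode, argument in program:
        if opcode == "PUSH":
            stack.append(argument)
            continue
        right = stack.pop()
        left = stack.pop()
        if opcode == "ADD":
            stack.append(left + right)
        elif opcode == "SUB":
            stack.append(left - right)
        elif opcode == "MUL":
            stack.append(left * right)
        elif opcode == "DIV":
            stack.append(left / right)
    return stack.pop()


program = compile_expression("2 + 3 * 4")
print(program)
# [('PUSH', 2), ('PUSH', 3), ('PUSH', 4), ('MUL', None), ('ADD', None)]
print(run(program))  # 14
```

The one-line expression is shorter and easier to write than the five instructions it produces, which is the practical test for automatic programming described above.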

This method streamlines and accelerates software development by removing the need for humans to manually write repetitive or difficult code. Simplified inputs, such as user requirements or system models, may be translated into usable programs using automatic programming tools.

A Few AI Coding Assistants

GitHub Copilot

Amazon CodeWhisperer

Codiga

Bugasura

CodeWP

AI Helper Bot

Tabnine

Reply

Sourcegraph Cody

AskCodi

Unlocking the Potential of Automatic Programming

AI can do in one minute what used to take an engineer 30 minutes to do.

The term “automatic programming” refers to the process of creating code without the need for a human programmer, often using more abstract requirements. Knowledge of algorithms, data structures, and design patterns underpins the development of software, whether it’s written by a person or a computer.

Also, new modules may be easily integrated into existing systems thanks to autonomous programming, which shortens product development times and helps businesses respond quickly to changing market needs.

In many other contexts, from data management and process automation to the creation of domain-specific languages and the creation of software for specialized devices, automated programming has shown to be an invaluable tool.

Its strength is in situations when various modifications or variants of the same core code are required. Automatic programming encourages innovation and creativity by facilitating quick code creation with minimal human involvement, giving developers more time to experiment with new ideas, iterate on designs, and expand the boundaries of software technology.

How to Get Started with AI Code Assistant?

Have you thought of using artificial intelligence coding assistance to turbocharge your coding skills?

Artificial intelligence can save programmers’ time for more complicated problem-solving by automating routine, repetitive processes. Developers may make use of AI algorithms that can write code to shorten iteration times and boost output.

You can now write code more quickly and accurately, leaving more time for you to think about innovative solutions to the complex problems you’re trying to solve.

In Visual Studio Code, for instance, you can use Amazon CodeWhisperer to create code just by writing a comment describing what you want it to do; the integrated development environment (IDE) will then offer the full code snippet for you to use and modify as necessary.

0 notes

Text

Mistral AI Codestral Platform Debuts On Google Vertex AI

Codestral

Mistral AI presents its first code model, Codestral. Codestral is an open-weight generative AI model designed specifically for code generation tasks. It helps developers write and interact with code through a shared instruction and completion API endpoint. Because it is proficient in both code and English, it can be used to build advanced AI applications for software developers.

A model proficient in more than 80 programming languages

Codestral was trained on more than 80 programming languages, including some of the most widely used ones like Python, Java, C, C++, JavaScript, and Bash. It also performs well on more specialised ones, such as Swift and Fortran. This broad language base lets it help developers across a wide range of coding environments and projects.

Because Codestral can construct tests, finish coding functions, and finish any unfinished code using a fill-in-the-middle approach, it saves developers time and effort. Engaging with Codestral can enhance a developer’s coding skills and lower the likelihood of mistakes and glitches.
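The fill-in-the-middle idea can be sketched without calling any model. In the Python example below, the code before the cursor becomes the prefix, the code after it becomes the suffix, and a completion is whatever text gets spliced in between. The `<CURSOR>` marker, the function names, and the sample completion are all hypothetical; the example does not reproduce Codestral’s actual prompt format or API.

```python
CURSOR = "<CURSOR>"  # hypothetical marker for where the editor's cursor sits


def split_at_cursor(source_with_marker):
    """Split source code into the (prefix, suffix) pair a FIM request needs."""
    prefix, marker, suffix = source_with_marker.partition(CURSOR)
    if not marker:
        raise ValueError("no cursor marker found in source")
    return prefix, suffix


def apply_completion(prefix, middle, suffix):
    """Splice a proposed middle section between the prefix and the suffix."""
    return prefix + middle + suffix


unfinished = (
    "def add(a, b):\n"
    "    <CURSOR>\n"
    "\n"
    "print(add(1, 2))\n"
)
prefix, suffix = split_at_cursor(unfinished)

# Stand-in for a model's answer; a real model would generate this text.
middle = "return a + b"
print(apply_completion(prefix, middle, suffix))
```

Because the suffix is part of the request, a model can take into account code that comes after the gap, such as the `print(add(1, 2))` call here, rather than only what precedes it.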

Raising the Bar for Performance in Code Generation

Performance. As a 22B model, Codestral sets a new standard in the performance/latency space for code generation compared with earlier models used for coding.

Python. Codestral’s Python abilities were evaluated using four benchmarks: HumanEval pass@1 and MBPP sanitised pass@1 for code generation, CruxEval for Python output prediction, and RepoBench EM for long-range repository-level code completion.

SQL: Spider was used to benchmark Codestral’s SQL performance.

Mistral Codestral

Get Codestral and give it a try

Codestral is a 22B open-weight model licensed under the new Mistral AI Non-Production License, which means you can use it for testing and research. It is available for download on HuggingFace.

If you would like to use the model for your business, commercial licenses are also available on demand by contacting the team.

Utilise Codestral through its specific endpoint

This release adds a new endpoint, codestral.mistral.ai. Users who rely on the Fill-In-the-Middle or Instruct routes within their IDE should choose this endpoint. Its API key is managed at the personal level and is not bound by the standard organisation rate limits. The endpoint will be free to use for the first eight weeks of its test program, but it sits behind a waitlist to guarantee high-quality service. Developers creating applications or IDE plugins where users are expected to provide their own API keys should use this endpoint.

Utilise Codestral to build on la Plateforme

Codestral is also immediately available via the standard API endpoint, api.mistral.ai, where requests are billed per token. This endpoint and its integrations are better suited to research, batch queries, and third-party application development that exposes results directly to users without requiring them to bring their own API keys.

By following this guide, you can register for an account on la Plateforme and begin using Codestral to build your applications. As with all of Mistral AI’s other models, Codestral is also available in the self-deployment offering: get in touch with sales.

Engage Codestral through le Chat

Mistral AI is releasing an instructed version of Codestral, which you can use today through its free conversational interface, Le Chat. Developers can take advantage of the model’s capabilities by interacting with Codestral in a natural and intuitive way. Mistral AI considers Codestral a fresh step towards giving everyone access to code generation and comprehension.

Use Codestral in your preferred environment for building and coding

In collaboration with community partners, Mistral AI has made Codestral available in popular tools for AI application development and developer productivity.

Frameworks for applications. As of right now, Codestral is integrated with LlamaIndex and LangChain, making it simple for users to create agentic apps using Codestral.

VSCode and JetBrains integration. With the help of Continue.dev and Tabnine, developers can now generate and converse with code using Codestral inside the VSCode and JetBrains environments.

Codestral Mistral AI

Google Cloud is pleased to announce that Codestral, Mistral AI's first open-weight generative AI model designed specifically for code generation tasks, is now available as a fully managed service on Google Cloud, making Google Cloud the first hyperscaler to offer it. Through a shared instruction and completion API endpoint, Codestral helps developers write and interact with code. It is available in Vertex AI Model Garden today.

Furthermore, Google Cloud is excited to announce that the latest large language models (LLMs) from Mistral AI have been added to Vertex AI Model Garden. These LLMs are generally available today through Model-as-a-Service (MaaS) endpoints:

Mistral Large 2: Mistral AI's flagship model, offering the best performance and versatility of any model the company has released to date.

Mistral Nemo: For a small fraction of the price, this 12B model offers remarkable performance.

The new models are excellent at coding, math, and multilingual tasks (English, French, German, Italian, and Spanish). As a result, they suit a variety of downstream tasks, such as software development and content localisation. Notably, Codestral is well suited to tasks like test generation, documentation, and code completion. Model-as-a-Service lets you access the new models with minimal effort and without the need for infrastructure or setup.

With these updates, Google Cloud remains committed to an open and flexible AI ecosystem that helps you build the solutions that fit your needs. Google Cloud's partnership with Mistral AI is evidence of its open approach within a unified, enterprise-ready environment. Vertex AI provides a fully managed Model-as-a-Service (MaaS) offering with a curated selection of first-party, open-source, and third-party models, now including the recently released Mistral AI models. With MaaS, you can choose the foundation model that best suits your needs, access it easily through an API, and customise it with powerful development tools, all with the convenience of a single bill and enterprise-grade security on Google Cloud's fully managed infrastructure.

Try and adopt Mistral AI models on Google Cloud

Vertex AI from Google Cloud is a comprehensive AI platform for experimenting with, customising, and deploying foundation models. With Mistral AI's new models joining the 150+ models already available in Vertex AI Model Garden, you have even more choice and flexibility to select the models that best suit your needs and budget while keeping pace with the ever-increasing rate of innovation.

Try it with assurance

Explore Mistral AI models in Google Cloud's user-friendly environment with straightforward API calls and thorough side-by-side comparisons. Google Cloud takes care of the infrastructure and deployment details for you.
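To give a sense of what such an API call targets, the sketch below assembles the URL for a Mistral model served through Vertex AI. The URL pattern (a `publishers/mistralai/models/...:rawPredict` path on the regional `aiplatform.googleapis.com` host) is an assumption based on Vertex AI's convention for partner models and is not stated in this post. The project, region, and model values are hypothetical placeholders, and the exact model identifier should be taken from the Model Garden card.

```python
def vertex_mistral_url(project_id, region, model, streaming=False):
    """Assemble the (assumed) Vertex AI URL for a Mistral partner model.

    `model` is whatever identifier the Model Garden card lists; the values
    used below are placeholders, not real identifiers.
    """
    method = "streamRawPredict" if streaming else "rawPredict"
    return (
        f"https://{region}-aiplatform.googleapis.com/v1/"
        f"projects/{project_id}/locations/{region}/"
        f"publishers/mistralai/models/{model}:{method}"
    )


# Hypothetical project, region, and model id, for illustration only.
url = vertex_mistral_url("my-project", "us-central1", "MODEL_ID")
print(url)
```

A real request would POST a JSON body to this URL with an OAuth access token in the `Authorization` header. Authentication is left out here so the sketch stays runnable offline.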

Adjust the models to your benefit

Utilise your distinct data and subject expertise to fine-tune Mistral AI’s foundation models and provide custom solutions. MaaS will soon allow for the fine-tuning of Mistral AI models.

Create and manage intelligent agents

Utilising Vertex AI’s extensive toolkit, which includes LangChain on Vertex AI, create and manage agents driven by Mistral AI models. Use Genkit’s Vertex AI plugin to incorporate Mistral AI models into your production-ready AI experiences.

Transition from experiments to real-world use

Use pay-as-you-go pricing to deploy your Mistral AI models at scale without having to manage infrastructure. You will also be able to maintain consistent capacity and performance with Provisioned Throughput, which will become available in the coming weeks. And you get infrastructure built specifically for AI workloads.

Deploy with confidence

Use Google Cloud’s strong security, privacy, and compliance protections to deploy with confidence, knowing that your data and models are protected at every turn.

Start Using Google Cloud’s Mistral AI models now

Google is dedicated to giving developers simple access to the most cutting-edge AI features. Google Cloud's collaboration with Mistral AI is evidence of both companies' commitment to providing an open and transparent AI ecosystem together with cutting-edge AI research. To keep its customers at the forefront of AI capabilities, Google Cloud will continue working closely with Mistral AI and other partners.

Visit Model Garden (Codestral, Large 2, Nemo) or the documentation to view the Mistral AI models. To find out more about the new models, see Mistral AI’s announcement. The Google Cloud Marketplace (Codestral, Large 2, and Nemo) offers the Mistral AI models as well.

Read more on govindhtech.com
